Efficient Earth Observation data processing relying on CODE-DE

In April 2020, a relaunch of the Copernicus Data and Exploitation Platform – Deutschland (CODE-DE) was announced. This was a big deal, since the second generation of the CODE-DE platform was to offer public access to the long-awaited online processing environment. At that time, our WaCoDiS research project was in its final phase, and we were ready to validate our developments as part of a pre-operational deployment. The relaunch of CODE-DE therefore came at just the right time.

CODE-DE early years

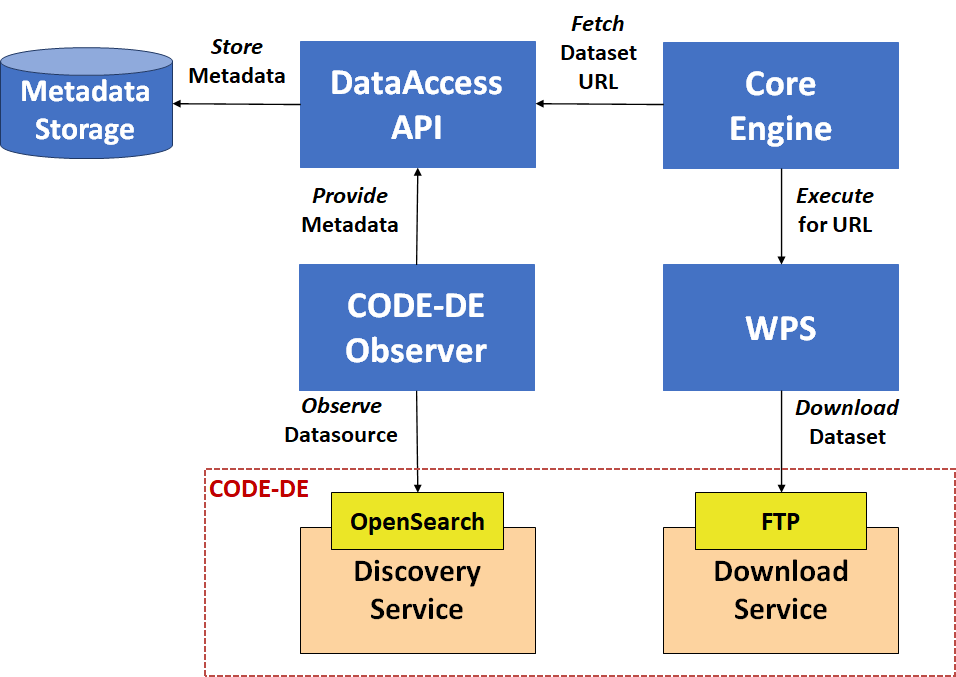

The CODE-DE platform was launched in March 2017 to facilitate access to Earth Observation (EO) data from the European Copernicus program. It explicitly addressed the needs of different German user groups, such as public authorities, research institutes and industry. The first-generation CODE-DE platform provided download and discovery services for Copernicus satellite data, as well as a platform for linking related monitoring applications and sharing derived EO products. From the beginning, CODE-DE was conceived to provide its users with a cloud-like processing environment. However, this feature never became production-ready and was only available to selected user groups as part of a proof-of-concept phase. Our WaCoDiS system connected to the CODE-DE discovery and download services, but was limited to a legacy offline workflow in which large Earth Observation datasets had to be downloaded before processing.

WPS processing approach

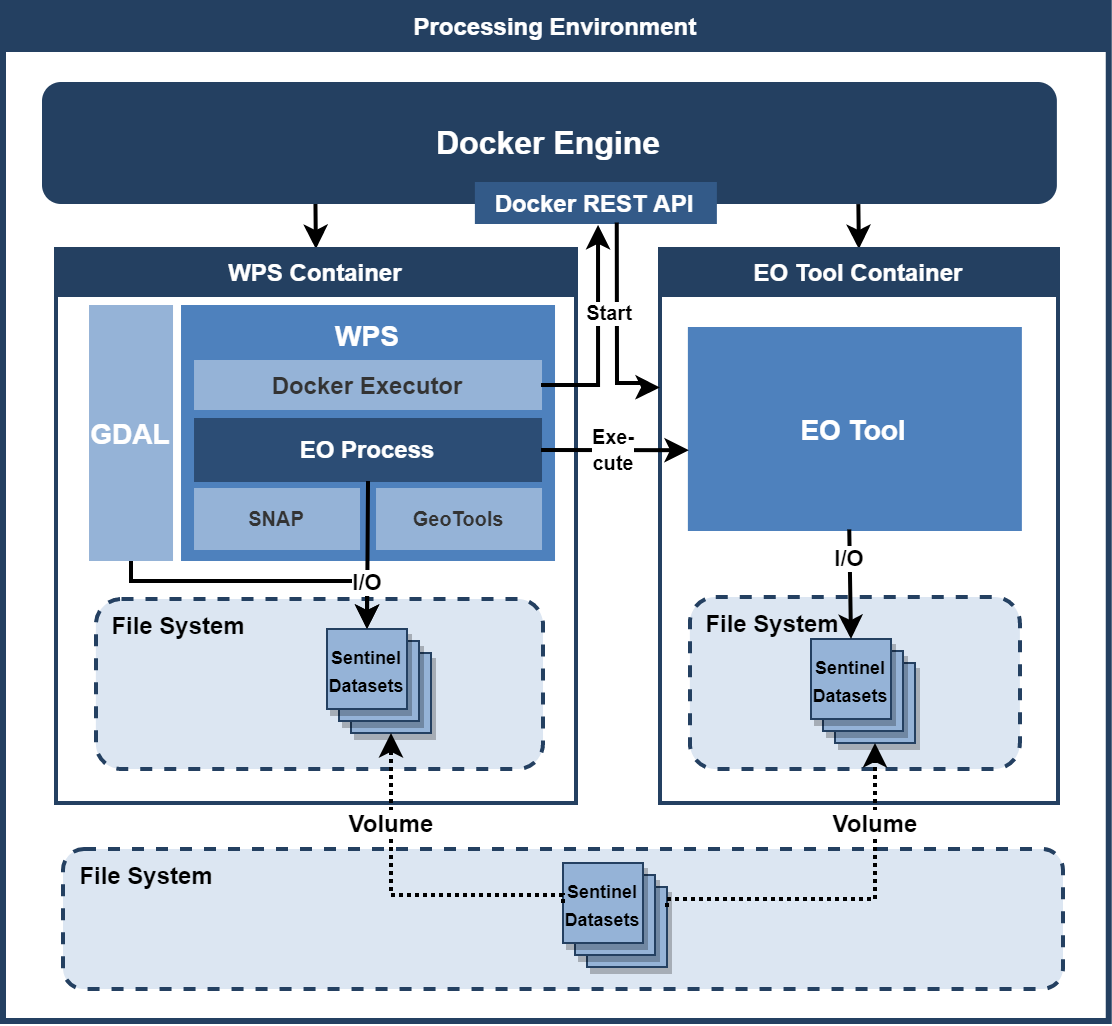

In the first two years of the WaCoDiS research project, we designed and implemented a system architecture for automated Earth Observation data processing (introduced in our first WaCoDiS blog post). Our aim was to make it as flexible and platform-independent as possible. The core WaCoDiS System was built upon several microservices designed to run in a Kubernetes environment on arbitrary cloud platforms. In addition, we developed a strategy for interoperable EO data processing that relies on the OGC Web Processing Service (WPS) standard, which we expected to be able to run on CODE-DE someday. This approach addressed several challenges:

- Platform independence: The containerization of WPS instances as well as EO tools (custom-developed algorithms, e.g., Python scripts) enables deployment within arbitrary environments that provide a container runtime.

- Standardized process execution: One or more WPS instances encapsulate arbitrary containerized tools that implement comprehensive EO data processing routines.

- Reusability of existing tools: We embedded renowned open-source tools, such as the Sentinel Toolboxes, GeoTools and GDAL, within our WPS instance to exploit their satellite data preprocessing routines.

The 52°North javaPS implementation, in combination with a custom backend we developed as part of WaCoDiS, serves as our WPS server.
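To illustrate what a standardized process execution looks like on the wire, here is a minimal Python sketch that builds an OGC WPS 2.0 Execute request document, the kind of request a client could POST to a WPS server such as javaPS. The process identifier, the input and output names and the example values are hypothetical and are not taken from the WaCoDiS process descriptions; only the element structure follows the WPS 2.0 schema.

```python
import xml.etree.ElementTree as ET

WPS_NS = "http://www.opengis.net/wps/2.0"
OWS_NS = "http://www.opengis.net/ows/2.0"
ET.register_namespace("wps", WPS_NS)
ET.register_namespace("ows", OWS_NS)


def build_execute_request(process_id, literal_inputs, output_ids):
    """Build a WPS 2.0 Execute document for an asynchronous process run.

    process_id, literal_inputs (id -> value) and output_ids are supplied by
    the caller; the values in the usage example are hypothetical.
    """
    execute = ET.Element(
        f"{{{WPS_NS}}}Execute",
        {"service": "WPS", "version": "2.0.0", "response": "document", "mode": "async"},
    )
    ET.SubElement(execute, f"{{{OWS_NS}}}Identifier").text = process_id
    for input_id, value in literal_inputs.items():
        wps_input = ET.SubElement(execute, f"{{{WPS_NS}}}Input", {"id": input_id})
        ET.SubElement(wps_input, f"{{{WPS_NS}}}Data").text = value
    for output_id in output_ids:
        # Ask the server to return the result by reference (e.g. a URL)
        # instead of embedding potentially large raster data in the response.
        ET.SubElement(
            execute, f"{{{WPS_NS}}}Output", {"id": output_id, "transmission": "reference"}
        )
    return ET.tostring(execute, encoding="unicode")


if __name__ == "__main__":
    body = build_execute_request(
        "hypothetical.land-cover-classification",
        {
            "OPTICAL_IMAGE": "https://example.org/products/sample-scene",
            "AREA_OF_INTEREST": "7.0,51.0,7.5,51.5",
        },
        ["PRODUCT"],
    )
    print(body)
```

Running the process asynchronously (mode="async") and requesting outputs by reference suits long-running EO jobs: the client can poll the job status instead of holding a connection open while the tool processes satellite scenes.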

CODE-DE second phase

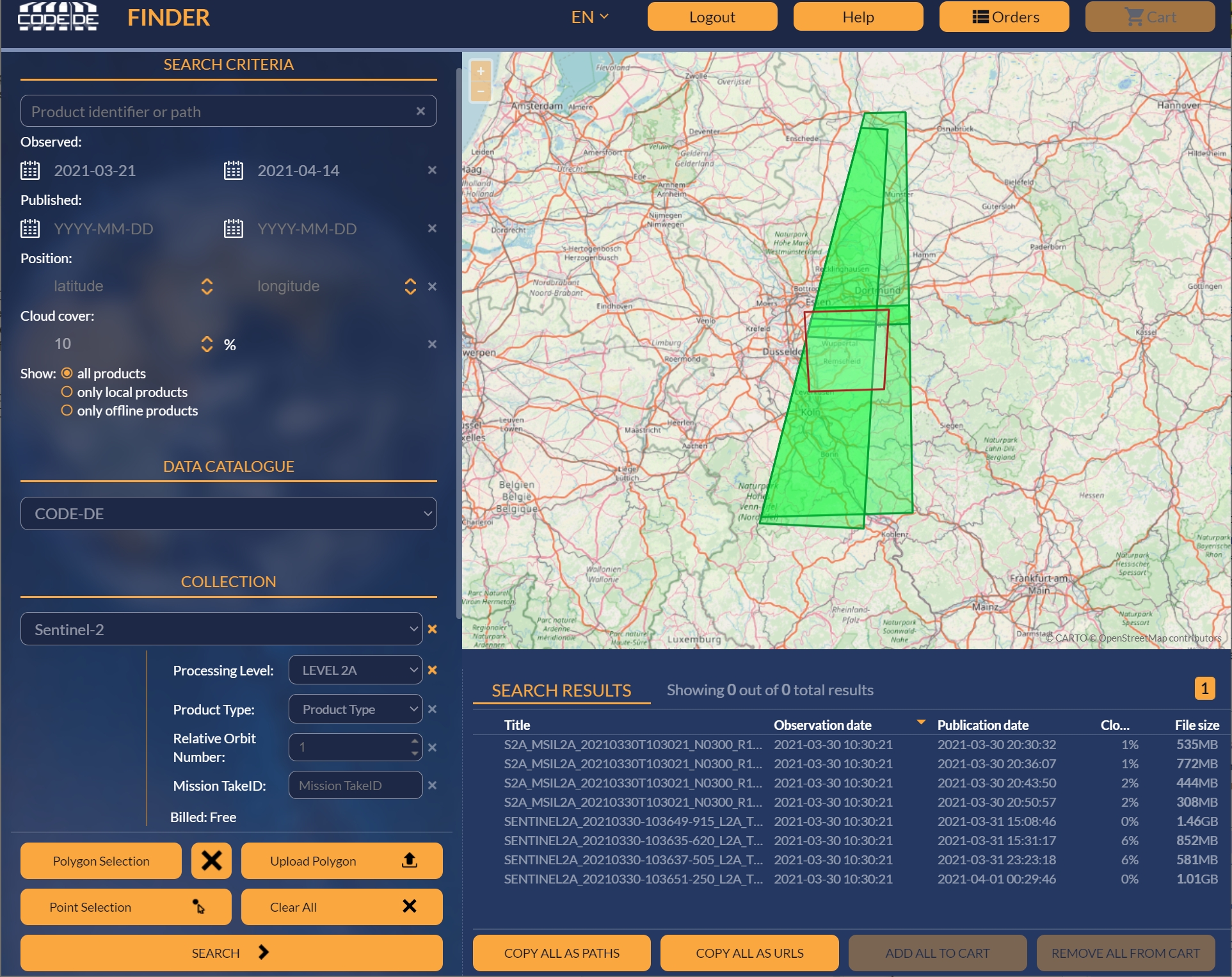

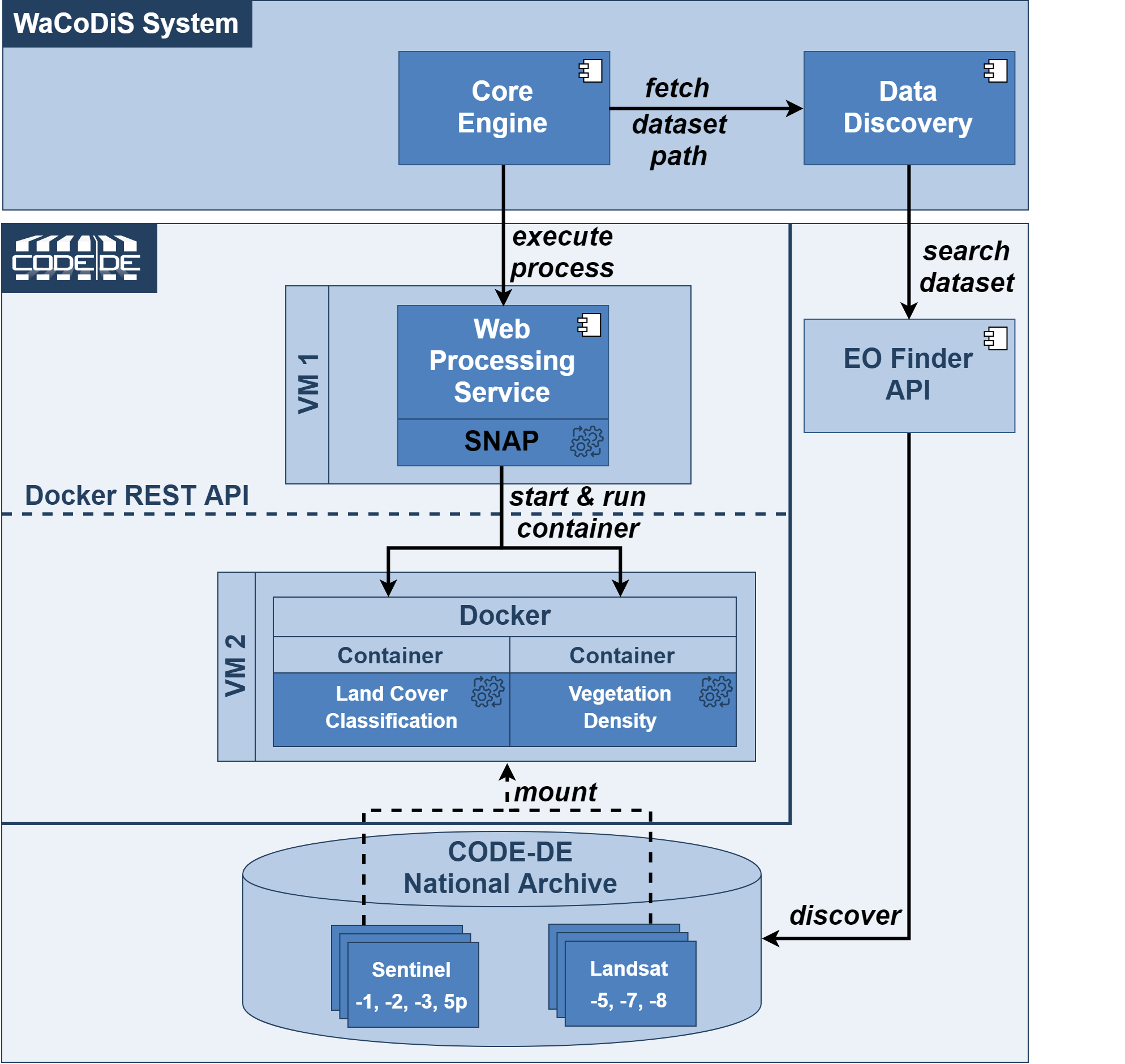

In the spring of 2020, the CODE-DE platform relaunch came as a surprise, since the second phase implied an extensive restructuring of the underlying architecture. Adapting the WaCoDiS System to the changed APIs was not a big deal, and we integrated the EO Finder API (an OpenSearch-based discovery API) quite quickly. The biggest surprise was the evolution of CODE-DE, which was now meant to be an Infrastructure as a Service (IaaS) platform. The platform now includes an online processing environment (CODE-DE Cloud) that German user groups can access in order to deploy custom processing routines close to the physical location of the Copernicus satellite data. This environment is accessed via virtual machines that have direct access to the Sentinel and Landsat collections. Since CODE-DE Cloud is built upon OpenStack, users can request virtual processing resources on demand and manage granted resources via an OpenStack Dashboard.
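A discovery request against an OpenSearch-based API like EO Finder boils down to an HTTP GET with spatial, temporal and metadata filters. The following Python sketch only assembles such a query URL. The endpoint URL is a placeholder, and the parameter names (box, startDate, completionDate, cloudCover, maxRecords) are assumptions modelled on common OpenSearch EO conventions, not a verified description of the EO Finder API.

```python
from datetime import date
from urllib.parse import urlencode

# Placeholder endpoint (hypothetical); the real search URL should be taken
# from the OpenSearch description document published by the service.
FINDER_SEARCH_URL = "https://finder.example.org/collections/Sentinel2/search.json"


def build_search_url(bbox, start, end, max_cloud_cover, max_records=20):
    """Assemble an OpenSearch query for scenes over a bounding box and time range.

    bbox is (west, south, east, north) in WGS 84 degrees. The query parameter
    names below are assumptions, not verified against EO Finder.
    """
    west, south, east, north = bbox
    params = {
        "box": f"{west},{south},{east},{north}",
        "startDate": start.isoformat(),
        "completionDate": end.isoformat(),
        "cloudCover": f"[0,{max_cloud_cover}]",  # cloud cover range in percent
        "maxRecords": max_records,
    }
    # urlencode percent-encodes commas and brackets in the values.
    return f"{FINDER_SEARCH_URL}?{urlencode(params)}"


if __name__ == "__main__":
    # Example query: scenes from June 2020 with at most 10 % cloud cover
    # over an example bounding box.
    print(build_search_url((7.0, 51.0, 7.5, 51.5), date(2020, 6, 1), date(2020, 6, 30), 10))
```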

WaCoDiS System Deployment on CODE-DE Cloud

We are now ready to validate our WPS-based processing approach within a pre-operational deployment set up on the evolved CODE-DE platform. Requesting access to CODE-DE Cloud was straightforward, since the CODE-DE providers encouraged research projects to try out the platform. Our WaCoDiS project was granted the use of the computing resources listed below:

- 8 vCPU

- 64 GB RAM

- 2 TB block storage

- 1 TB file storage

- 1 public IP

Using these resources, we set up two virtual machines. Both mount an object storage, accessed via an S3 interface, that contains the entire satellite data collection of the CODE-DE National Archive. The first VM hosts a WPS instance and has a public IP, so that the WPS interface can be accessed from outside the cloud. The containerized EO tools are deployed on the second VM, where the WPS instance triggers them via the Docker REST API.
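The Docker Engine exposes its functionality over a REST API, so triggering a containerized tool from the WPS backend can be reduced to three calls: create a container, start it, and wait for it to exit. The Python sketch below shows this sequence using only the standard library. The daemon address, image name, arguments and mount paths are hypothetical, and this is not the actual WaCoDiS backend code.

```python
import json
import urllib.request

# Hypothetical address of the Docker daemon on the tool VM
# (2375 is Docker's conventional unencrypted TCP port).
DOCKER_API = "http://tool-vm.internal:2375"


def build_create_payload(image, args, archive_dir):
    """Container configuration for POST /containers/create.

    The mounted satellite archive is bound read-only to /data inside the
    container, so the tool reads scenes in place instead of downloading them.
    """
    return {
        "Image": image,
        "Cmd": args,
        "HostConfig": {"Binds": [f"{archive_dir}:/data:ro"]},
    }


def _post(path, payload=None):
    """POST an optional JSON payload to the Docker API and decode the JSON reply, if any."""
    data = json.dumps(payload).encode() if payload is not None else b""
    request = urllib.request.Request(
        DOCKER_API + path,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = response.read()
    return json.loads(body) if body else None


def run_tool(image, args, archive_dir):
    """Create and start a tool container, block until it exits, return its exit code."""
    created = _post("/containers/create", build_create_payload(image, args, archive_dir))
    container_id = created["Id"]
    _post(f"/containers/{container_id}/start")  # replies 204 No Content
    result = _post(f"/containers/{container_id}/wait")
    return result["StatusCode"]


if __name__ == "__main__":
    code = run_tool("example/eo-tool:latest", ["--input", "/data/Sentinel-2"], "/mnt/eodata")
    print("tool exited with", code)
```

A Docker daemon reachable over unencrypted TCP grants full control over its host, so in a deployment like this the port should only be reachable from the WPS VM, ideally protected with TLS.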

CODE-DE Cloud deployment evaluation

Our CODE-DE deployment scenario demonstrates an essential benefit for processing large Earth Observation datasets: there is no need to download them, since tools deployed within CODE-DE Cloud have direct access to the data. You can discover the links to the required satellite data via the EO Finder UI or the corresponding API and use these references to access the datasets from within the cloud.
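Because the archive is mounted on the VMs, a search result only needs to be translated into a file path before a tool can read the data. The sketch below assumes that the search response is a GeoJSON FeatureCollection whose features carry the product location in properties["productIdentifier"] under an /eodata prefix, and that the archive is mounted at /mnt/eodata. Both the field name and the paths are assumptions for illustration.

```python
import json
from pathlib import PurePosixPath

# Hypothetical mount point of the satellite archive on the processing VM.
ARCHIVE_MOUNT = PurePosixPath("/mnt/eodata")


def product_paths(search_response_json, archive_prefix="/eodata"):
    """Turn an OpenSearch GeoJSON response into paths on the mounted archive.

    Assumes each feature stores its archive location in
    properties["productIdentifier"], starting with archive_prefix.
    """
    features = json.loads(search_response_json)["features"]
    paths = []
    for feature in features:
        identifier = PurePosixPath(feature["properties"]["productIdentifier"])
        # relative_to raises ValueError if the product lies outside archive_prefix.
        paths.append(ARCHIVE_MOUNT / identifier.relative_to(archive_prefix))
    return paths


if __name__ == "__main__":
    sample = json.dumps({
        "type": "FeatureCollection",
        "features": [{
            "type": "Feature",
            "properties": {"productIdentifier": "/eodata/Sentinel-2/MSI/L2A/2020/06/01/SCENE.SAFE"},
        }],
    })
    for path in product_paths(sample):
        print(path)
```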

We did, however, detect a shortcoming regarding tool execution within our architecture. The EO tools are containerized, but do not run as stand-alone services. Thus, the WPS instances have to know which VM hosts a given tool container, which makes it more difficult to scale tool execution.
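The following Python sketch makes this coupling concrete with a hypothetical static mapping inside the WPS backend that pins each tool image to the Docker API of the VM hosting it. The tool names and address are invented for illustration. Adding a second tool VM, or moving a tool, means editing this table, and nothing in it spreads the load across several hosts.

```python
# Hypothetical static mapping maintained inside the WPS backend: every tool
# image is pinned to the Docker API of exactly one VM.
TOOL_HOSTS = {
    "hypothetical/land-cover-classification": "http://tool-vm.internal:2375",
    "hypothetical/vegetation-density": "http://tool-vm.internal:2375",
}


def docker_api_for(tool_image):
    """Return the Docker API endpoint of the VM that runs tool_image."""
    try:
        return TOOL_HOSTS[tool_image]
    except KeyError:
        # An unregistered tool cannot be executed at all, even if some VM
        # has spare capacity to run it.
        raise LookupError(f"no VM registered for tool {tool_image!r}") from None


if __name__ == "__main__":
    print(docker_api_for("hypothetical/land-cover-classification"))
```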

In summary, these are the most important findings of our evaluation of WaCoDiS on CODE-DE:

- Processing tools operate directly on large datasets in the cloud, so there is no longer any need to maintain one’s own processing and storage capacities.

- The WPS approach enables a seamless integration of CODE-DE Cloud into custom processing pipelines.

- Additional processing tools can be deployed with ease, since the WPS instance ensures their interoperable execution.

- To match the microservice paradigm, EO tools must be adapted to run as stand-alone services.
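As a rough idea of what such an adaptation could look like, the Python sketch below wraps a placeholder EO routine in a minimal HTTP service using only the standard library, together with the client call a WPS backend might make. The /process route, port 8080, the JSON payload and the process() function are hypothetical. Once a tool is reachable under a service URL, a platform such as Kubernetes, on which the core WaCoDiS System already runs, could place several replicas behind one address, so the WPS would no longer need to know which VM hosts the tool.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def process(params):
    """Placeholder for the actual EO routine (hypothetical): echoes its input."""
    return {"status": "done", "input": params.get("input")}


class ToolHandler(BaseHTTPRequestHandler):
    """Exposes the tool as a stand-alone service under POST /process."""

    def do_POST(self):
        if self.path != "/process":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        params = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps(process(params)).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the sketch quiet


def start_in_background(port=0):
    """Start the service on localhost (port=0 picks a free port) in a daemon thread."""
    server = HTTPServer(("127.0.0.1", port), ToolHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def call_tool(base_url, params):
    """Client side, as a WPS backend might call it: POST params, return the JSON result."""
    request = urllib.request.Request(
        f"{base_url}/process",
        data=json.dumps(params).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())


if __name__ == "__main__":
    HTTPServer(("0.0.0.0", 8080), ToolHandler).serve_forever()
```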

Conclusion and Outlook

As part of our system validation in WaCoDiS, we demonstrated the great potential of CODE-DE for EO data processing tasks. Traditional processing workflows based on downloading and locally processing satellite image data are quite inefficient. Relying on the big players in cloud computing often does not meet the requirements of the Earth Observation community, since their EO-specific product portfolio is quite small. Our WPS-based processing approach addresses both the need for efficient online processing workflows and the need for interoperable tool execution. It also reduces the risk of vendor lock-in.

Since we had such a great experience with the CODE-DE platform, we will study its capabilities in further research projects. We would especially like to mention MariGeoRoute, a MariData subproject that aims to find innovative solutions for optimizing ship routing. As part of this project, we are developing machine-learning-based approaches that operate on data from the Copernicus Marine Environment Monitoring Service; these data are also available within CODE-DE Cloud. So, stay tuned.

This work has been funded by the Federal Ministry of Transport and Digital Infrastructure (Germany), BMVI, as part of the mFund program.
