This is the last blog post I will be making for the Seismic SOS project! If you have been following my weekly reports page, you will have seen that it has been a huge learning process for me, and I just want to say that I am very grateful to have been given this opportunity by 52°North, GSoC 2013, and the open source community.

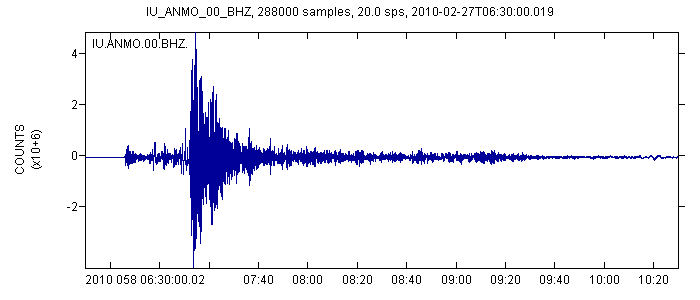

I will start with a summary of the project’s purpose. The idea for a Seismic SOS came from an earthquake data analysis class I took at Cal Poly University. The location and parameters of the station sensing the seismic waves are very important in making meaningful observations, and I thought this would tie in nicely with the geospatial sensing platform of the Sensor Observation Service and Sensor Web. The plan for this summer developed into the creation of Seismic SOS, a query-based proxy server designed to pull seismic data and events from the IRIS DMC Web Service, based on the location of sensor stations and specific earthquake events. As of now, the server can pull raw waveform data for a given station and time period to create timeseries graphs (Figure 1), and it can return earthquake event magnitudes based on specific event information.

Figure 1

The first half of the summer was spent getting up to speed on the code and the SOS development API. The data model and the method of data transportation were also devised during this time. This turned out to be quite challenging, and a lot of the process is explained in my midterm report from earlier this summer. Note that the data models have changed a bit since then; the updated versions will be added during documentation.

Once I was familiar with the code, development on the data access object (DAO) operation services was much more efficient. I was going for the simplest possible setup for operation requests. This involved a “cache feeder” DAO that feeds online stations and events into the cache and creates identifiers for them as procedures/offerings in the server, where they can be accessed by the GetCapabilities operation. The second DAO creates a description for a given sensor. A request for a cached procedure triggers a query to the IRIS station select web service for the valid time periods, location, and other attributes of the station, which are converted into SensorML and returned in the response. The final DAO is the GetObservation DAO, which is used to query the actual data. For event procedures, this only involves querying the earthquake magnitude associated with a cached FeatureOfInterest (in this case an event id). For station procedures, it involves passing a valid time period and returning the raw data required to graph the seismic waveform recorded at the station location during that time. Throughout all of these services, the most challenging part was making sure the data and data relationships conformed to the SOS’s highly modular design.
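To make the flow between the three DAOs easier to picture, here is a minimal sketch of it in Python. It is not the actual Seismic SOS code (which lives inside the 52°North SOS framework), and every name in it is hypothetical: `Cache`, `StubSeismicSource`, the `station:`/`event:` identifier prefixes, and the stubbed values are assumptions made for illustration only. The stub stands in for the IRIS web service so the example runs without network access, and the sensor description is returned as a plain dictionary rather than SensorML.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Cache:
    """In-memory stand-in for the SOS content cache (hypothetical)."""
    procedures: dict = field(default_factory=dict)  # procedure id -> "station" or "event"
    features: dict = field(default_factory=dict)    # procedure id -> feature-of-interest id


class StubSeismicSource:
    """Stand-in for the remote seismic web service. All data here is made up."""

    def stations(self):
        return ["NET.STA1"]

    def events(self):
        return ["evt-1"]

    def station_info(self, station):
        return {"id": station, "lat": 0.0, "lon": 0.0, "valid_from": "2000-01-01"}

    def magnitude(self, event_id):
        return 5.0

    def waveform(self, station, start, end):
        # One fake sample per second in the half-open window [start, end).
        return [0.0] * int((end - start).total_seconds())


def feed_cache(source, cache):
    """Cache feeder: register online stations and events as procedures."""
    for station in source.stations():
        pid = f"station:{station}"
        cache.procedures[pid] = "station"
        cache.features[pid] = station
    for event in source.events():
        pid = f"event:{event}"
        cache.procedures[pid] = "event"
        cache.features[pid] = event


def get_capabilities(cache):
    """List the cached procedure identifiers."""
    return sorted(cache.procedures)


def describe_sensor(source, cache, pid):
    """Look up station attributes for a cached station procedure.

    Simplification: only station procedures are handled in this sketch,
    and the result is a dict instead of a SensorML document.
    """
    if cache.procedures.get(pid) != "station":
        raise KeyError(f"not a cached station procedure: {pid}")
    return source.station_info(cache.features[pid])


def get_observation(source, cache, pid, start=None, end=None):
    """Return a magnitude for events, or raw samples for stations."""
    kind = cache.procedures.get(pid)
    if kind is None:
        raise KeyError(f"unknown procedure: {pid}")
    feature = cache.features[pid]
    if kind == "event":
        return {"magnitude": source.magnitude(feature)}
    if start is None or end is None or start >= end:
        raise ValueError("station observations need a valid time period")
    return {"samples": source.waveform(feature, start, end)}


if __name__ == "__main__":
    source, cache = StubSeismicSource(), Cache()
    feed_cache(source, cache)
    print(get_capabilities(cache))
    print(describe_sensor(source, cache, "station:NET.STA1"))
    print(get_observation(source, cache, "event:evt-1"))
```

The point of the sketch is the split of responsibilities: only the cache feeder writes identifiers, while the other two operations look a procedure up in the cache first and only then go to the remote source.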

I am proud of all the work I have put in on this project, but the truth is that my goal is only half achieved. Sensor Web, where seismic data could be shown together with its location on a map, is what I really wanted to see happen when I proposed the project. That turned out to be a bit too ambitious for someone with my experience and only one summer. So, I hope to continue my relationship with 52°North as a developer and begin work on Seismic SWE over the course of this school year.

Sincerely,

Patrick Noble

52°North
