This is the midterm update for my GSoC project to integrate seismic data support into the SOS (Sensor Observation Service). My progress was relatively slow at the start because of the learning curve of the SOS code base, but I have made a lot of progress recently. My main goal for the midterm milestone was to set up the SOS as a proxy data source that takes requests from the server and returns seismic data in time series and/or event format. I wanted to run my own instance of the SOS in which operations such as GetCapabilities, DescribeSensor, and GetObservation could pull data and simulate what a user might request in the SWE client. For details on this architecture, please refer to the SOS Wikipedia page.
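
As an illustration of what such a request can look like, here is a minimal sketch that builds a GetObservation request in the key-value-pair (KVP) form of SOS 2.0. The endpoint, procedure ID, and observed property are hypothetical placeholders, and the helper class is illustrative, not part of the SOS code base.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical helper: builds an SOS 2.0 KVP GetObservation request URL.
// The endpoint and the procedure/observedProperty identifiers are placeholders.
final class SosRequests {
    private SosRequests() {}

    static String getObservation(String endpoint, String procedure,
                                 String observedProperty, String start, String end) {
        Map<String, String> params = new LinkedHashMap<>(); // keeps parameter order stable
        params.put("service", "SOS");
        params.put("version", "2.0.0");
        params.put("request", "GetObservation");
        params.put("procedure", procedure);
        params.put("observedProperty", observedProperty);
        // Restrict results to an ISO 8601 interval on the phenomenon time
        params.put("temporalFilter", "om:phenomenonTime," + start + "/" + end);
        // URL-encode each value and join the pairs with '&'
        return endpoint + "?" + params.entrySet().stream()
                .map(e -> e.getKey() + "=" + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8))
                .collect(Collectors.joining("&"));
    }
}
```

For example, `SosRequests.getObservation("http://localhost:8080/sos/service", "IU.ANMO.00.BHZ", "velocity", "2010-02-27T06:30:00Z", "2010-02-27T06:35:00Z")` yields a URL that a client could send to a local instance; GetCapabilities and DescribeSensor requests follow the same `service`/`version`/`request` pattern.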

The main issue during the first portion of development was deciding which query tool to use: ObsPy or SeisFile. I initially chose ObsPy, since I have used it for all my earthquake analysis. However, ObsPy is written in Python, so I would have had to use Jython to integrate it into the Java framework of the SOS. This was the biggest problem, since Java, Jython, and Python each handle library dependencies differently. Because of this difficulty, I ultimately chose SeisFile, a Java-based seismic data querying library. SeisFile has worked perfectly in the SOS thus far.
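
Under the hood, a waveform query against an FDSN data center is an HTTP request with standard parameters. The sketch below builds an FDSN `dataselect` query URL directly; it does not reproduce SeisFile's own query classes, and it assumes plain SEED codes (no wildcards) and whole-second UTC times.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

// Sketch of an FDSN dataselect query URL built from the standard FDSN
// web service parameters. SeisFile's own API is not shown here.
final class FdsnDataselect {
    // FDSN times are UTC; sub-second precision is dropped in this sketch
    private static final DateTimeFormatter FDSN_TIME =
            DateTimeFormatter.ofPattern("yyyy-MM-dd'T'HH:mm:ss").withZone(ZoneOffset.UTC);

    private FdsnDataselect() {}

    // Codes are assumed to be plain SEED identifiers, so no URL encoding is applied
    static String queryUrl(String host, String net, String sta, String loc,
                           String cha, Instant start, Instant end) {
        if (!start.isBefore(end)) {
            throw new IllegalArgumentException("start must be before end");
        }
        return "https://" + host + "/fdsnws/dataselect/1/query"
                + "?net=" + net + "&sta=" + sta + "&loc=" + loc + "&cha=" + cha
                + "&starttime=" + FDSN_TIME.format(start)
                + "&endtime=" + FDSN_TIME.format(end);
    }
}
```

Keeping the query in Java avoids the Python/Jython dependency split described above: the request is just a URL, and a Java library can fetch and parse the response inside the same JVM as the SOS.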

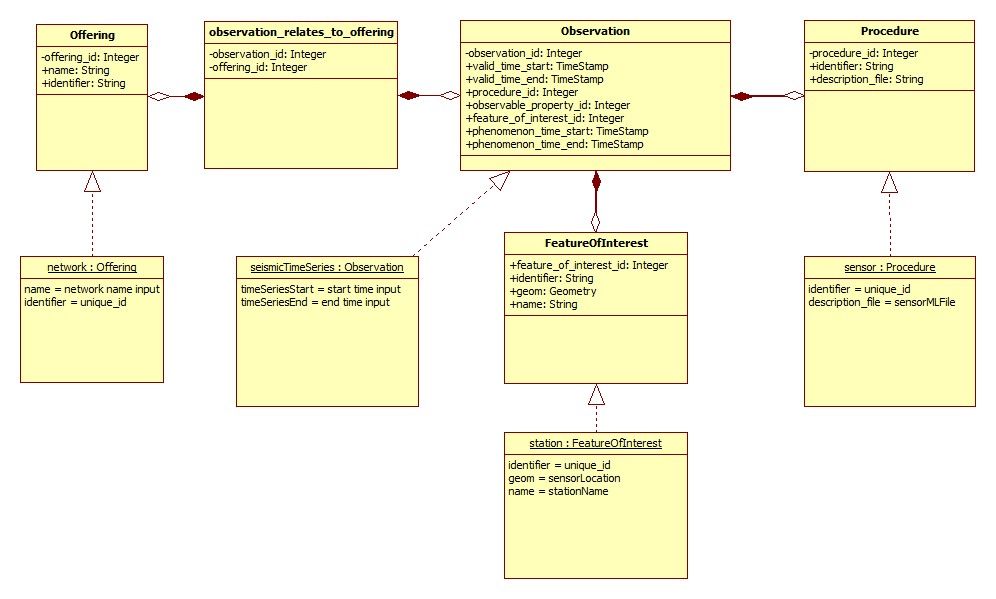

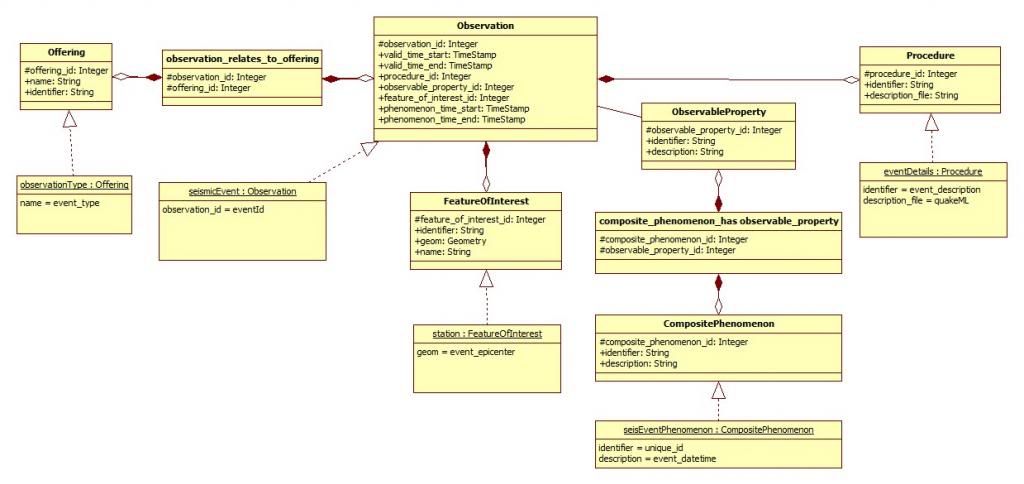

The second challenge was mapping the data into the SOS. This was somewhat easier, since the SOS's object-oriented structure resembles that of most Java projects. However, the design is meant to be extremely modular, which adds overhead and complexity in order to support a wide variety of data formats. In this case, I am implementing data model support for seismic time series data as well as seismic events; the general formats are shown as UML diagrams.
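
To make the two formats concrete, here is a minimal sketch of what simplified time series and event models might look like in Java. The record and field names are hypothetical and do not mirror the UML diagrams or any SOS class exactly.

```java
import java.time.Instant;

// Hypothetical, simplified time series model; field names are illustrative.
record SeismicTimeSeries(String network, String station, String location,
                         String channel, Instant start, double sampleRateHz,
                         int[] samples) {
    SeismicTimeSeries {
        if (sampleRateHz <= 0) throw new IllegalArgumentException("sample rate must be positive");
        if (samples.length == 0) throw new IllegalArgumentException("no samples");
    }

    // SEED-style stream identifier, e.g. "IU.ANMO.00.BHZ"
    String streamId() {
        return String.join(".", network, station, location, channel);
    }

    // Time of the last sample: start + (n - 1) / sampleRate seconds
    Instant lastSampleTime() {
        long offsetNanos = Math.round((samples.length - 1) * 1e9 / sampleRateHz);
        return start.plusNanos(offsetNanos);
    }
}

// Hypothetical event model: origin time, hypocenter, and magnitude.
record SeismicEvent(Instant originTime, double latitudeDeg, double longitudeDeg,
                    double depthKm, double magnitude, String magnitudeType) {}
```

A time series is fully described by its start time, sample rate, and samples, so derived values such as the end time do not need to be stored; an event, by contrast, is a single point in time and space.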

Now that the scripts have been written and the data models chosen, I have been focusing on the core functionality: shifting the SOS from its current Hibernate data access objects (DAOs) to DAOs that act as seismic data proxies. This involves cloning the current classes while still implementing the existing interfaces, to stay consistent with the modular design of the SOS. The new classes must also be referenced by the webapp. I expect the next challenge will not be the querying itself, but making sure the data fits into the modular puzzle that is the SOS data structure. If I implement the interfaces and abstract classes correctly, the data will funnel in and arrange itself into the usable format defined by the object model I have created.
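
The idea of swapping a database-backed DAO for a proxy behind the same interface can be sketched as follows. `ObservationDao`, `WaveformSource`, and the other names are hypothetical stand-ins, not the actual SOS DAO interfaces; the remote fetch is abstracted behind an interface so the example runs without a network.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;

// Hypothetical SOS-side interface; callers depend only on this, not on Hibernate.
interface ObservationDao {
    List<Observation> getObservations(String procedure, Instant start, Instant end);
}

// One observed value at one point in time.
record Observation(String procedure, Instant time, double value) {}

// Hypothetical raw waveform segment as returned by a remote data source.
record Waveform(Instant start, double sampleRateHz, double[] samples) {}

// Abstraction over the remote query (e.g. a SeisFile-based client in practice).
interface WaveformSource {
    Waveform fetch(String streamId, Instant start, Instant end);
}

// Proxy DAO: instead of reading a database, forwards the query to a remote source
// and converts the returned samples into timestamped observations.
final class SeismicProxyDao implements ObservationDao {
    private final WaveformSource source;

    SeismicProxyDao(WaveformSource source) {
        this.source = source;
    }

    @Override
    public List<Observation> getObservations(String procedure, Instant start, Instant end) {
        Waveform w = source.fetch(procedure, start, end);
        List<Observation> out = new ArrayList<>(w.samples().length);
        for (int i = 0; i < w.samples().length; i++) {
            // Sample i occurs i / sampleRate seconds after the segment start
            long offsetNanos = Math.round(i * 1e9 / w.sampleRateHz());
            out.add(new Observation(procedure, w.start().plusNanos(offsetNanos), w.samples()[i]));
        }
        return out;
    }
}
```

Because the proxy implements the same interface as the database-backed DAO it replaces, code that calls `getObservations` needs no changes; only the wiring that chooses which implementation to instantiate does.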

The cache is currently being fed with information about valid query parameters. What remains is referencing the new DAOs in the webapp so that the server can actually issue the queries. I will keep posting updates on my weekly reports page if you would like to follow my progress.
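
One way to picture a cache of valid query parameters is a map from each procedure to the time range for which data is available, consulted before any remote query is made. The class below is a hypothetical sketch of that idea, not the SOS cache implementation.

```java
import java.time.Instant;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical cache of valid query parameters: for each procedure (stream),
// the time extent for which data is known to be available.
final class QueryParameterCache {
    private record TimeExtent(Instant start, Instant end) {}

    // Concurrent map, since the cache may be fed while requests are served
    private final Map<String, TimeExtent> extents = new ConcurrentHashMap<>();

    // Called while the cache is being fed, e.g. from station metadata
    void put(String procedure, Instant start, Instant end) {
        extents.put(procedure, new TimeExtent(start, end));
    }

    // True if the procedure is known and [start, end] lies within its extent
    boolean isValid(String procedure, Instant start, Instant end) {
        TimeExtent ext = extents.get(procedure);
        return ext != null
                && !start.isAfter(end)
                && !start.isBefore(ext.start())
                && !end.isAfter(ext.end());
    }

    // Procedure IDs that could be advertised, e.g. in a GetCapabilities response
    Set<String> procedures() {
        return Set.copyOf(extents.keySet());
    }
}
```

Checking a request against the cache first lets the service reject an unknown stream or an out-of-range time window cheaply, without forwarding it to the remote data source.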
