Introduction

There are a lot of reasons why it is useful to have your phone with you in the car and enviroCar encourages you to do so. At the same time, it’s a potentially dangerous distraction to use your phone while driving. Interacting with the enviroCar Android app while driving is a serious concern, as it may result in an accident.

Addressing the issue, the enviroCar: Voice Command project kicked off during the Google Summer of Code 2022 with an exciting and forward-thinking vision in mind: making enviroCar a hands-free experience. The project aimed to integrate voice commands into the app, allowing users to perform actions like starting or stopping the track recorder and selecting a car through simple voice instructions. Additionally, the project introduced wake word functionalities to initiate voice commands and laid the groundwork for future enhancements.

Building upon the progress made last year, this year’s focus will be on expanding and refining the project’s functionalities, potentially preparing it for a significant release. The primary goal of the project remains implementing voice control for key app features and ensuring that the voice assistant works smoothly in a variety of situations.

About enviroCar

enviroCar Mobile is an Android application for smartphones that can be used to collect Extended Floating Car Data (XFCD). The app communicates with an OBD2 Bluetooth adapter while the user drives, which gives it read access to data from the vehicle’s engine control. This data is recorded along with the smartphone’s GPS position data. Drivers can view statistics about their drives and publish their data as open data by uploading tracks to the enviroCar server. The data can also be viewed and analyzed via the enviroCar website. enviroCar Mobile is one of the components of the enviroCar Citizen Science Platform.

Project Goals

1. Improving the accuracy of wake word detection

Wake words are voice triggers for activating a virtual assistant. Wake word detection in the enviroCar app was implemented as part of the GSoC 22 project. The previous implementation used AimyBox with PocketSphinx speech kit, and it works offline. Similar to Google Assistant, which is triggered by saying “Okay, Google”, the enviroBot is currently triggered by “enviroCar listen”.

The problem here is the accuracy of the wake word detection: it is hit-and-miss. Improving this accuracy is the primary goal. Good open-source options for wake word detection on Android are limited.

The accuracy of the current PocketSphinx model can be improved, using techniques:

While this is one of the strategies, utilizing better phonemes and lexicons could be another. I am also exploring alternatives and am currently testing some updated Vosk models in the mobile app. Both Vosk and PocketSphinx models are trainable and open source. Using these techniques, we can make positive changes and improve accuracy.
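To compare candidate models such as PocketSphinx and Vosk, the "hits and misses" can be put into numbers. The following is a minimal sketch, assuming each model has been run over a set of labelled recordings; the `Trial` type and the sample results are made up for illustration and are not measurements from enviroCar.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    wake_word_spoken: bool  # ground truth: did the speaker say the wake word?
    detected: bool          # did the model fire on this recording?


def evaluate(trials):
    """Return (miss_rate, false_alarm_rate) for a list of labelled trials."""
    positives = [t for t in trials if t.wake_word_spoken]
    negatives = [t for t in trials if not t.wake_word_spoken]
    misses = sum(1 for t in positives if not t.detected)
    false_alarms = sum(1 for t in negatives if t.detected)
    miss_rate = misses / len(positives) if positives else 0.0
    false_alarm_rate = false_alarms / len(negatives) if negatives else 0.0
    return miss_rate, false_alarm_rate


# Hypothetical results, for illustration only.
sample_trials = [
    Trial(True, True), Trial(True, False), Trial(True, True), Trial(True, True),
    Trial(False, False), Trial(False, True), Trial(False, False), Trial(False, False),
]

miss_rate, false_alarm_rate = evaluate(sample_trials)
print(f"miss rate: {miss_rate:.2f}, false alarm rate: {false_alarm_rate:.2f}")
```

Tracking both numbers matters in a car: a high miss rate frustrates the driver, while a high false alarm rate makes the bot wake up on ordinary conversation or road noise.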

2. Expanding voice command flows further

A voice command is a spoken instruction given by the user to the bot. These voice commands are used to control the app hands-free. To understand and interpret them, we use Rasa NLU, which classifies the intent behind the user’s speech and enables a more natural, conversational interaction.

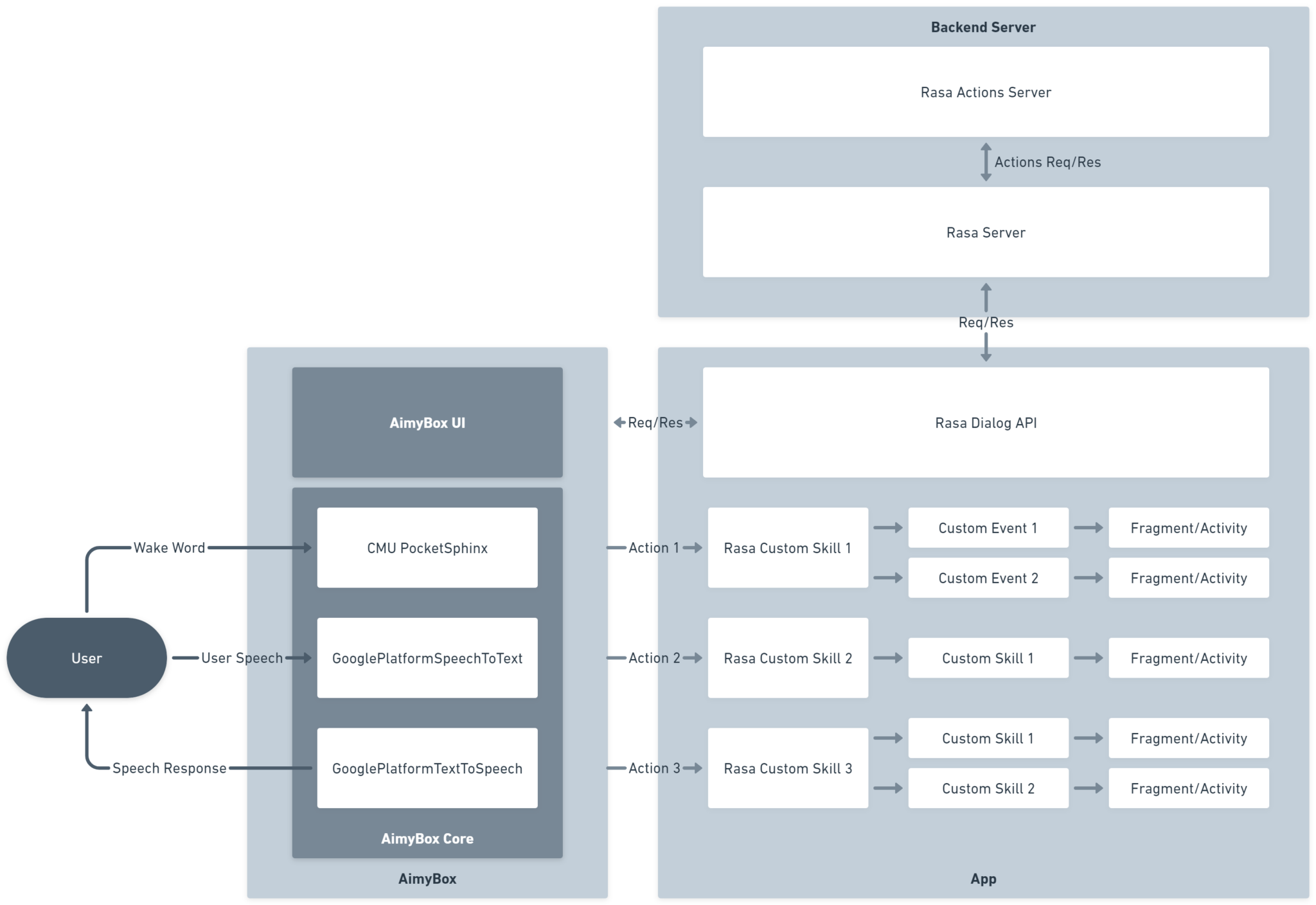

A robust architecture for handling voice commands was already created in GSoC ’22. The architecture primarily involves the following functional components:

- AimyBox: Handles wake word detection, text-to-speech, and speech-to-text engines.

- DialogAPI: Interacts with the Rasa server and performs necessary actions on the app.

- Rasa NLU & Actions Server: It classifies the intent behind the user’s text.
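As a rough illustration of the last step, the sketch below builds a request body for Rasa's `/model/parse` HTTP endpoint and reads the top intent from a response of the shape that endpoint returns. The intent name `start_recording`, the confidence threshold, and the sample response are assumptions made for this example; the actual DialogAPI may talk to the Rasa server through a different endpoint.

```python
import json


def build_parse_request(text):
    """JSON body for POST <rasa-server>/model/parse."""
    return json.dumps({"text": text})


def top_intent(parse_response, min_confidence=0.6):
    """Return the intent name, or None if the classifier is not confident enough.

    min_confidence is a hypothetical threshold chosen for this sketch.
    """
    intent = parse_response.get("intent") or {}
    name = intent.get("name")
    confidence = intent.get("confidence", 0.0)
    if name is None or confidence < min_confidence:
        return None
    return name


# Hand-written sample in the shape of a /model/parse response.
sample_response = {
    "text": "start recording my track",
    "intent": {"name": "start_recording", "confidence": 0.97},
    "entities": [],
}

print(build_parse_request("start recording my track"))
print(top_intent(sample_response))
```

Returning `None` below the threshold lets the app ask the user to repeat the command instead of acting on a weak guess.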

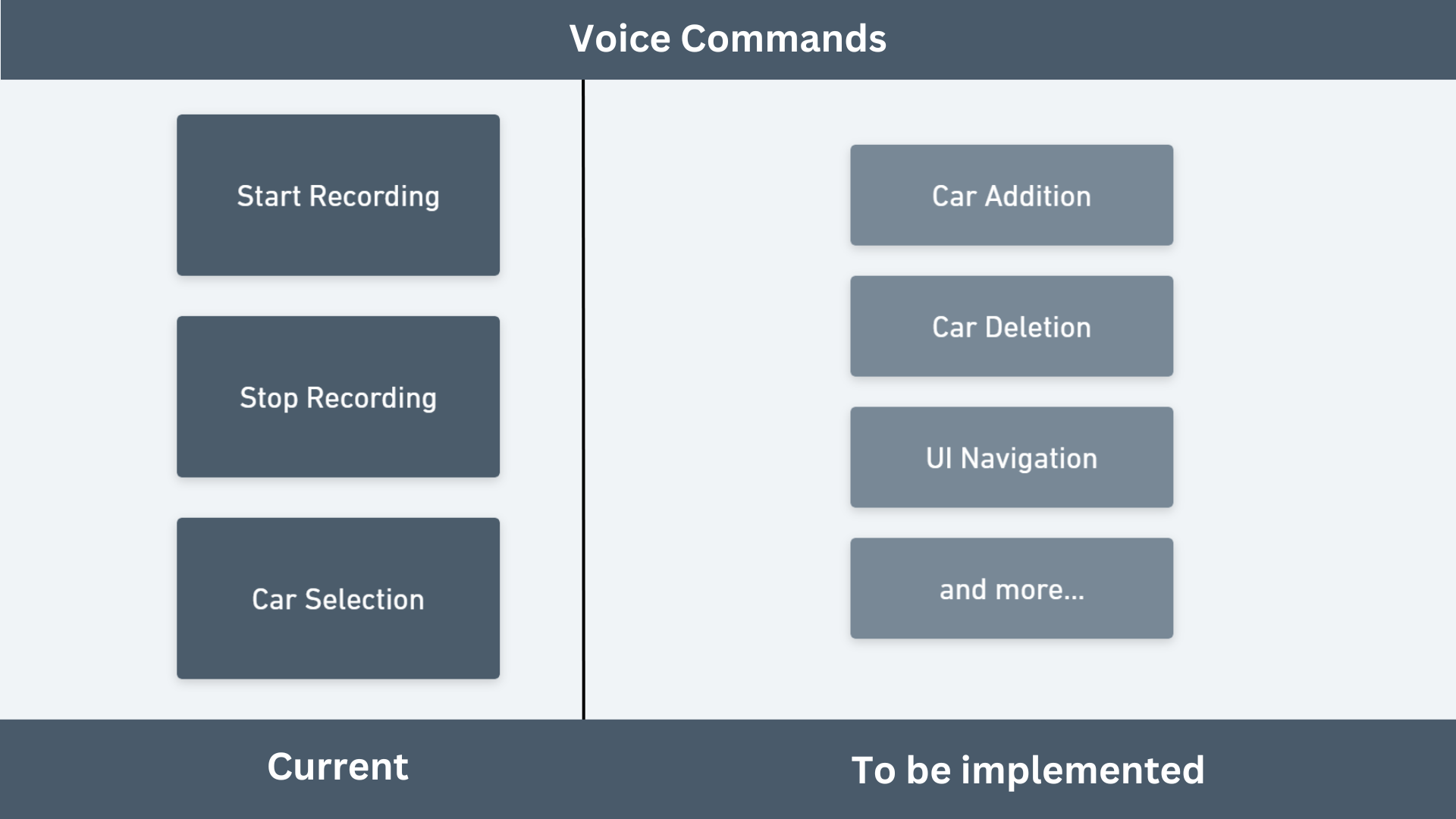

Currently, the project has implementations of the following 3 voice commands:

- Start Recording

- Stop Recording

- Car Selection

This is just a small portion of all the hands-on functionalities you can perform on the enviroCar app. The second goal is to expand this further by adding newer voice commands for the other major functionalities of the app.
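One way to keep new commands cheap to add is to map each classified intent to a handler in a single registry. The sketch below is language-agnostic in spirit and written in Python only for brevity; the class, the intent names, and the replies are hypothetical and do not describe the app's existing code.

```python
class CommandRegistry:
    """Maps intent names to handler functions."""

    def __init__(self):
        self._handlers = {}

    def register(self, intent):
        """Decorator that registers a handler for the given intent name."""
        def decorator(func):
            self._handlers[intent] = func
            return func
        return decorator

    def dispatch(self, intent):
        """Run the handler for an intent, or return a fallback reply."""
        handler = self._handlers.get(intent)
        if handler is None:
            return "Sorry, I did not understand that."
        return handler()


registry = CommandRegistry()


@registry.register("start_recording")
def start_recording():
    return "Starting track recording."


@registry.register("stop_recording")
def stop_recording():
    return "Stopping track recording."


print(registry.dispatch("start_recording"))
print(registry.dispatch("open_settings"))  # not registered yet, so falls back
```

With this shape, adding a voice command means training the new intent in Rasa and registering one more handler, without touching the dispatch logic.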

3. Stabilizing the bot

The modularity and architecture of the bot are well thought out. This architecture also utilizes ViewModels and Coroutines in making the bot functional throughout the app, on any activity.

There are certain known issues with the implementation that need to be corrected. Known bugs, such as multiple instantiations of AimyBox and incorrect function calls, are to be resolved. We also plan to run a proper scan of the code to look for any other remaining issues or vulnerabilities.

Additionally, natural conversations do not always follow a well-defined pattern. They are free-flowing, with context changes and interruptions in between. The goal is to make enviroBot capable of handling interruptions and intent changes between stories or forms with ease.
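The intended behaviour can be pictured with a small plain-Python model of one form being interrupted. In Rasa itself this would be expressed through forms, rules, and stories rather than hand-written code, so the class below, its intent names, and its replies are purely illustrative assumptions.

```python
class Conversation:
    """Toy dialogue manager with a single form: car selection."""

    def __init__(self):
        self.active_form = None

    def handle(self, intent, value=None):
        if intent == "select_car":
            self.active_form = "car_selection"
            return "Which car would you like to use?"

        if self.active_form == "car_selection":
            if intent == "inform_car":
                self.active_form = None
                return f"Selected {value}."
            if intent == "cancel":
                self.active_form = None
                return "Okay, cancelled car selection."
            # Interruption: answer the other intent, then re-ask the open question.
            reply = self._answer(intent)
            return f"{reply} Back to car selection: which car would you like to use?"

        return self._answer(intent)

    def _answer(self, intent):
        answers = {"ask_status": "No track is being recorded."}
        return answers.get(intent, "Sorry, I did not understand that.")


bot = Conversation()
print(bot.handle("select_car"))
print(bot.handle("ask_status"))          # interruption in the middle of the form
print(bot.handle("inform_car", "Golf"))  # form resumes and completes
```

The key property is that an unrelated question does not discard the open form: the bot answers it and then returns to the pending question.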

Once we’ve attended to these issues, the enviroBot will be more stable, powerful, and ready for a major release in the future.

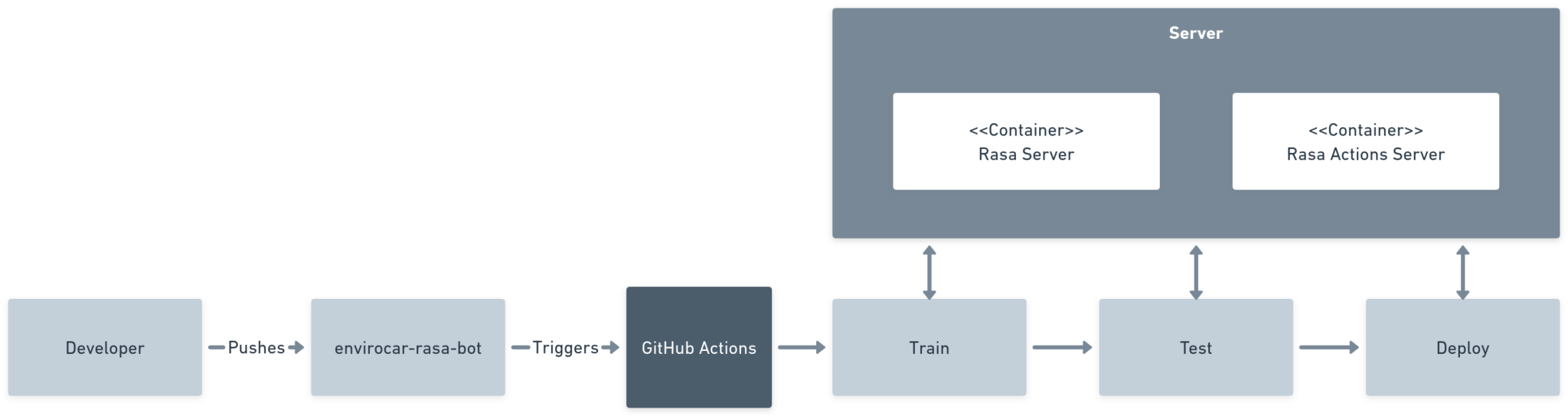

4. Developing a CI/CD pipeline for the enviroCar Rasa bot

The enviroCar-Rasa-bot has already been developed on the microservices architecture. For reference, microservices architecture is the preferred software architecture for modern scalable applications. A microservice application is composed of multiple small independent services that work together to provide a complete system. Each service typically has its own operating environment.

The benefits of using a microservices architecture include:

- Scalability: Individual services can be scaled independently based on their specific needs. This is particularly important for a chatbot.

- Faster development cycles: Each service can have its own independent release cycle.

- Continuous Integration/Continuous Deployment: Because each service runs in its own environment, the architecture lends itself to container-based CI/CD pipelines.

What is CI/CD?

CI/CD is a set of practices and tools used to automate the process of building, testing, and deploying application changes.

CI involves the frequent and automated integration of code.

- It requires developers to regularly push their code changes to a version control system.

- An automated workflow is triggered to compile the code and build the software.

- If the builds and tests are successful, the changes are integrated into the main codebase.

CD is about automating the release and deployment of software changes to production environments.

- It involves testing, after a successful integration.

- If the tests pass, the changes are automatically deployed to a staging or production environment.

The goal here is to develop a pipeline that will automate the manual processes of the Rasa bot, as well as a pipeline for testing the new changes and generating a report of the effects it has on the accuracy of classification. The testing pipeline (workflow) for this is already being developed.
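The reporting step of such a testing workflow could look roughly like the sketch below. It assumes the pipeline has already produced (expected intent, predicted intent) pairs for the current model and for the changed one; the function names, the sample pairs, and the 2% tolerance are hypothetical choices for this example.

```python
def accuracy(pairs):
    """Fraction of (expected, predicted) pairs where the prediction is correct."""
    if not pairs:
        return 0.0
    correct = sum(1 for expected, predicted in pairs if expected == predicted)
    return correct / len(pairs)


def compare(baseline, candidate, max_drop=0.02):
    """Summarise how a change affects intent classification accuracy.

    max_drop is an assumed tolerance: the check fails if accuracy falls by more.
    """
    base_acc = accuracy(baseline)
    cand_acc = accuracy(candidate)
    delta = cand_acc - base_acc
    return {
        "baseline": base_acc,
        "candidate": cand_acc,
        "delta": delta,
        "passed": delta >= -max_drop,
    }


# Made-up predictions, for illustration only.
baseline_pairs = [
    ("start_recording", "start_recording"),
    ("stop_recording", "stop_recording"),
    ("select_car", "start_recording"),
    ("select_car", "select_car"),
]
candidate_pairs = [
    ("start_recording", "start_recording"),
    ("stop_recording", "stop_recording"),
    ("select_car", "select_car"),
    ("select_car", "select_car"),
]

report = compare(baseline_pairs, candidate_pairs)
print(report)
```

A CI job can fail the build when `passed` is false, so a change to the training data that hurts classification is caught before it is merged.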

5. Writing tests

Testing is an important aspect as it helps to identify and fix bugs and ensure that the app functions correctly for a wide range of scenarios and possibilities. I will be incorporating testing early into the development process to ensure that any issues are identified and resolved in the sprint itself.

Goals include the development of functional and performance tests for the Android app and test stories for the Rasa backend. Android instrumented testing tools will be used for writing unit and integration tests.

About Me

I am Ayush Dubey, a pre-final year Computer Science Engineering student from New Delhi, India. I am a nerd, a techy, and a science enthusiast with a love for developing software systems. I have experience working as a Backend Developer Intern at the Indian Institute of Technology Kanpur (IIT Kanpur). I deeply value Open Source projects and contributions and enjoy working with open-source communities and technical societies on impactful projects. When away from my keyboard, you’ll find me jamming on my guitar or listening to some quality rock, or babbling about theoretical physics and astronomy. This will be my first Google Summer of Code. I am really excited and looking forward to some great learnings and contributions under the guidance and mentorship of Dhiraj Chauhan.

Let’s connect 👋: LinkedIn, Twitter
